Main memory temporarily holds the data and instructions the CPU is working on.

CPU is an acronym for central processing unit. The name refers to the hardware that interprets and carries out software instructions through logical and arithmetic operations.

A personal computer can have one CPU, or more than one to achieve multiprocessing. Today, CPUs are built on an integrated circuit (chip) known as a microprocessor. A single chip can house several CPUs, known as cores, giving rise to so-called multicore processors.

CPU configurations

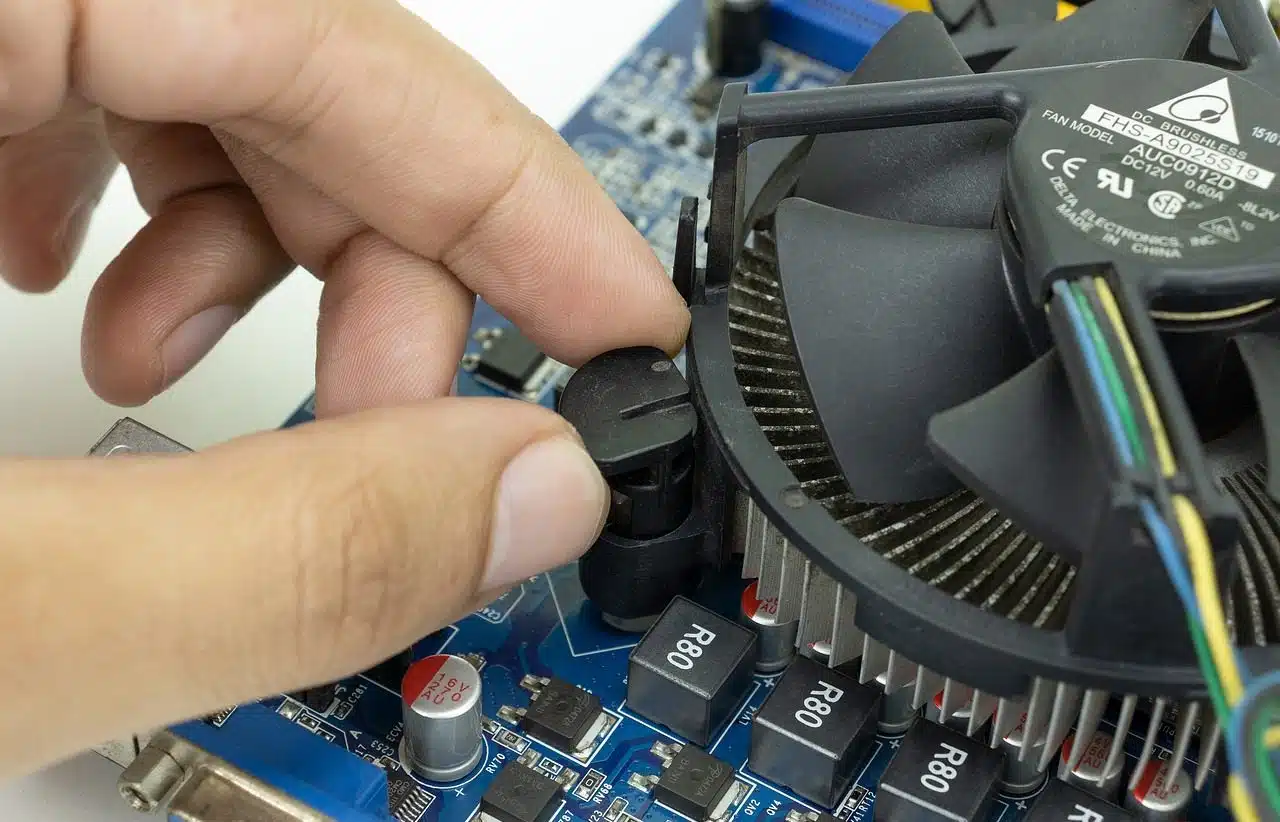

The CPU socket (or CPU slot) is located on the motherboard and connects the microprocessor, which in most cases is not soldered so that it can be removed or replaced later. Devices such as mobile phones, tablets and consoles, on the other hand, have their components soldered to the motherboard, since their manufacturers do not expect customers to modify the products.

This gives rise to two types of configurations: a closed one, in which modifications are not allowed unless the customer is willing to lose the warranty coverage offered by the manufacturer; and an open one, typical of desktop computers, which suits enthusiasts who want to upgrade components frequently to stay up to date.

The CPU contains the ALU (arithmetic logic unit), which performs the logical and arithmetic operations required by the software. The control unit (CU), for its part, fetches instructions from memory, decodes them and directs their execution, calling on the ALU when necessary.
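
The role of the ALU can be illustrated with a minimal sketch. The operation names, the 8-bit width and the zero flag below are assumptions chosen for illustration, not the instruction set of any real processor.

```python
import operator

# Hypothetical operation table: the opcode names are illustrative only.
ALU_OPS = {
    "ADD": operator.add,
    "SUB": operator.sub,
    "AND": operator.and_,
    "OR": operator.or_,
    "XOR": operator.xor,
}


def alu(op, a, b, width=8):
    """Apply an arithmetic or logical operation to two unsigned integers.

    Returns (result, zero_flag). The result wraps around at `width` bits,
    the way a fixed-width hardware register would.
    """
    mask = (1 << width) - 1
    result = ALU_OPS[op](a, b) & mask
    return result, result == 0
```

For example, `alu("SUB", 5, 5)` yields a zero result with the zero flag set, which is the kind of status a control unit could consult before deciding what to do next.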

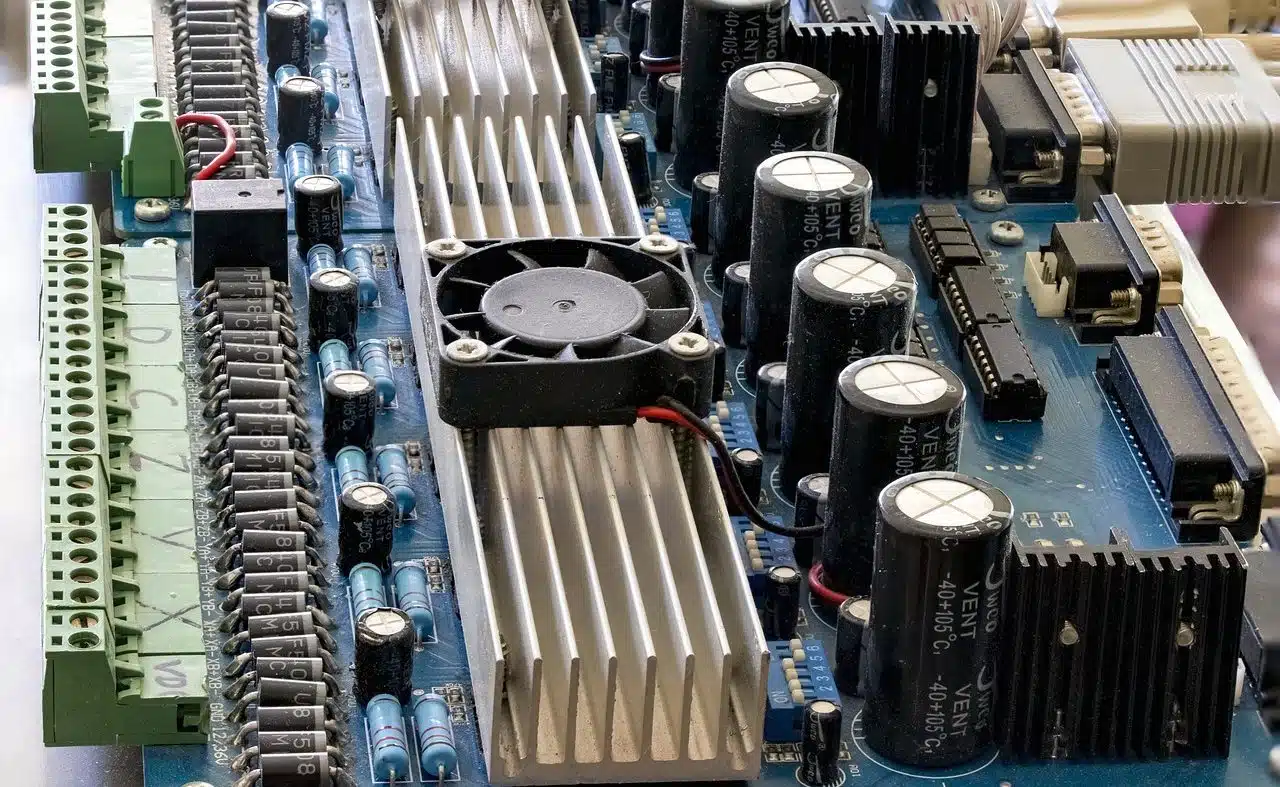

CPU cooling can combine a heat sink and thermal paste with the CPU fan.

Different architectures

The design and operating structure of a computer is called its architecture. The concept refers above all to how the CPU works.

Among the most common is the Harvard architecture, in which instruction memory is physically separate from data memory. In the earliest machines of this type, data storage was contained entirely within the CPU.

In the von Neumann architecture, meanwhile, the CPU can either read or write data or fetch an instruction, but cannot do both at the same time, because data and instructions share the same bus. The Harvard architecture does allow the CPU to fetch an instruction and access data memory simultaneously.
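
The difference can be sketched with a deliberately simplified cost model. It assumes that every memory access takes exactly one bus cycle and that, with separate buses, the data access of one instruction overlaps the fetch of the next; real processors add caches and pipelines that this toy model ignores. The function names and the sample program are hypothetical.

```python
def von_neumann_cycles(program):
    """Shared bus: each instruction fetch and each data access needs its own cycle."""
    return sum(1 + int(needs_data) for needs_data in program)


def harvard_cycles(program):
    """Separate buses: a data access overlaps the fetch of the following instruction."""
    if not program:
        return 0
    # One cycle per fetch, plus one trailing cycle if the last instruction touches data.
    return len(program) + int(program[-1])


# Hypothetical program: True marks an instruction that reads or writes data memory.
program = [True, False, True, True]
shared_bus = von_neumann_cycles(program)
separate_buses = harvard_cycles(program)
```

Under these assumptions the shared-bus machine needs 7 cycles for the sample program and the machine with separate buses needs 5.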

Another possibility is the so-called modified Harvard architecture, which makes it possible to access the contents of instruction memory as if they were data. The separation between data and instructions is therefore no longer so marked.

The CPU cache minimizes memory access time.

CPU clock frequency

The clock frequency of the CPU indicates how often its transistors can open and close the flow of current, paced by a clock signal. It is a physical quantity expressed in cycles per second (hertz).
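
As a rough worked example, the duration of one cycle is the inverse of the frequency. The sketch below assumes a hypothetical 3.2 GHz clock and ignores the fact that instructions can take more or less than one cycle each.

```python
def cycle_time_ns(frequency_hz):
    """Duration of a single clock cycle in nanoseconds."""
    return 1e9 / frequency_hz


def execution_time_s(cycles, frequency_hz):
    """Seconds needed to complete a given number of clock cycles."""
    return cycles / frequency_hz


FREQUENCY_HZ = 3.2e9  # assumed example value: 3.2 GHz
one_cycle = cycle_time_ns(FREQUENCY_HZ)                 # about 0.3125 ns
two_seconds = execution_time_s(6_400_000_000, FREQUENCY_HZ)
```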

To improve CPU performance, parallel processing is used. This involves executing many instructions simultaneously, dividing large problems into smaller ones that are solved in parallel. There is also the option of overclocking, which consists of raising the clock frequency (also called clock rate or clock speed) beyond the value specified by the manufacturer.
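
The idea of dividing a large problem into smaller ones can be sketched as follows. The helper names are hypothetical, and a thread pool is used only to keep the example short: in CPython, threads show the decomposition but do not run CPU-bound work on several cores at once, so a process pool would be the usual substitute for real speedups.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(data, n_chunks):
    """Split a list into at most n_chunks contiguous pieces of similar size."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division, never zero
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_of_squares(chunk):
    """The small sub-problem solved independently for each chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    """Solve each chunk in a separate worker, then combine the partial results."""
    chunks = chunked(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)
```

Calling `parallel_sum_of_squares(list(range(10)))` gives the same total as the sequential computation; only the way the work is divided changes.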

Its functions

At a general level, the CPU's main function is to execute software (that is, the set of instructions we know as a computer program). Software is represented as code stored in the computer's memory, which the CPU fetches, decodes and executes, finally writing back the result.
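
These steps can be sketched as a toy interpreter. The four opcodes, the single accumulator register and the dictionary used as memory are assumptions made for illustration and do not correspond to any real instruction set.

```python
def run(program, memory):
    """Execute a toy program and return the memory after it finishes."""
    pc = 0    # program counter: index of the next instruction
    acc = 0   # accumulator: the only register of this toy machine
    while pc < len(program):
        instruction = program[pc]        # fetch
        pc += 1
        opcode, operand = instruction    # decode
        if opcode == "LOAD":             # execute
            acc = memory[operand]
        elif opcode == "ADD":
            acc += memory[operand]
        elif opcode == "STORE":
            memory[operand] = acc        # write the result back to memory
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return memory


# Hypothetical program: z = x + y
program = [("LOAD", "x"), ("ADD", "y"), ("STORE", "z"), ("HALT", None)]
result = run(program, {"x": 2, "y": 3, "z": 0})
```

After the run, `result["z"]` holds 5, the sum that was computed in the accumulator and written back.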

The faster the CPU performs these steps, the faster the computer responds to the user. In colloquial terms, a powerful CPU allows us to run demanding software quickly.

It is important to note that the CPU does not work alone, but usually shares tasks with other components, such as the GPU (graphics processing unit). The GPU is considered a coprocessor, since it is a microprocessor that complements the operation of the CPU. This does not mean that it is unimportant: it is essential in any device that needs to display graphics on a screen, as almost all devices in use today do.

GPU-accelerated computing

NVIDIA Corporation, an American company founded in 1993 that specializes in the development of GPUs and integrated circuit technologies, introduced in 2007 a concept it called GPU-accelerated computing, which consists of using a graphics processing unit to assist the CPU and speed up certain applications in the fields of engineering, analysis and deep learning.

Deep learning is a group of algorithms that aim to model high-level abstractions through architectures composed of multiple nonlinear transformations. In simpler words, it is a series of methods that allow computers to assimilate data so that they can automatically learn to solve certain problems. This task is so complex and demanding that combining a CPU with a GPU is ideal for obtaining results in less time.
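
What "multiple nonlinear transformations" means can be shown with a minimal sketch in plain Python. The layer sizes, weights and the choice of `tanh` as the nonlinearity are arbitrary assumptions for illustration; practical systems learn the weights from data and run these multiply-and-add steps on a GPU, many at a time.

```python
import math


def layer(inputs, weights, biases):
    """One transformation: a weighted sum per output unit, then a nonlinear tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Chain several layers so that each one transforms the output of the previous one."""
    for weights, biases in layers:
        inputs = layer(inputs, weights, biases)
    return inputs


# Hypothetical two-layer network: 2 inputs -> 3 hidden units -> 1 output.
network = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]
output = forward([1.0, 2.0], network)
```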