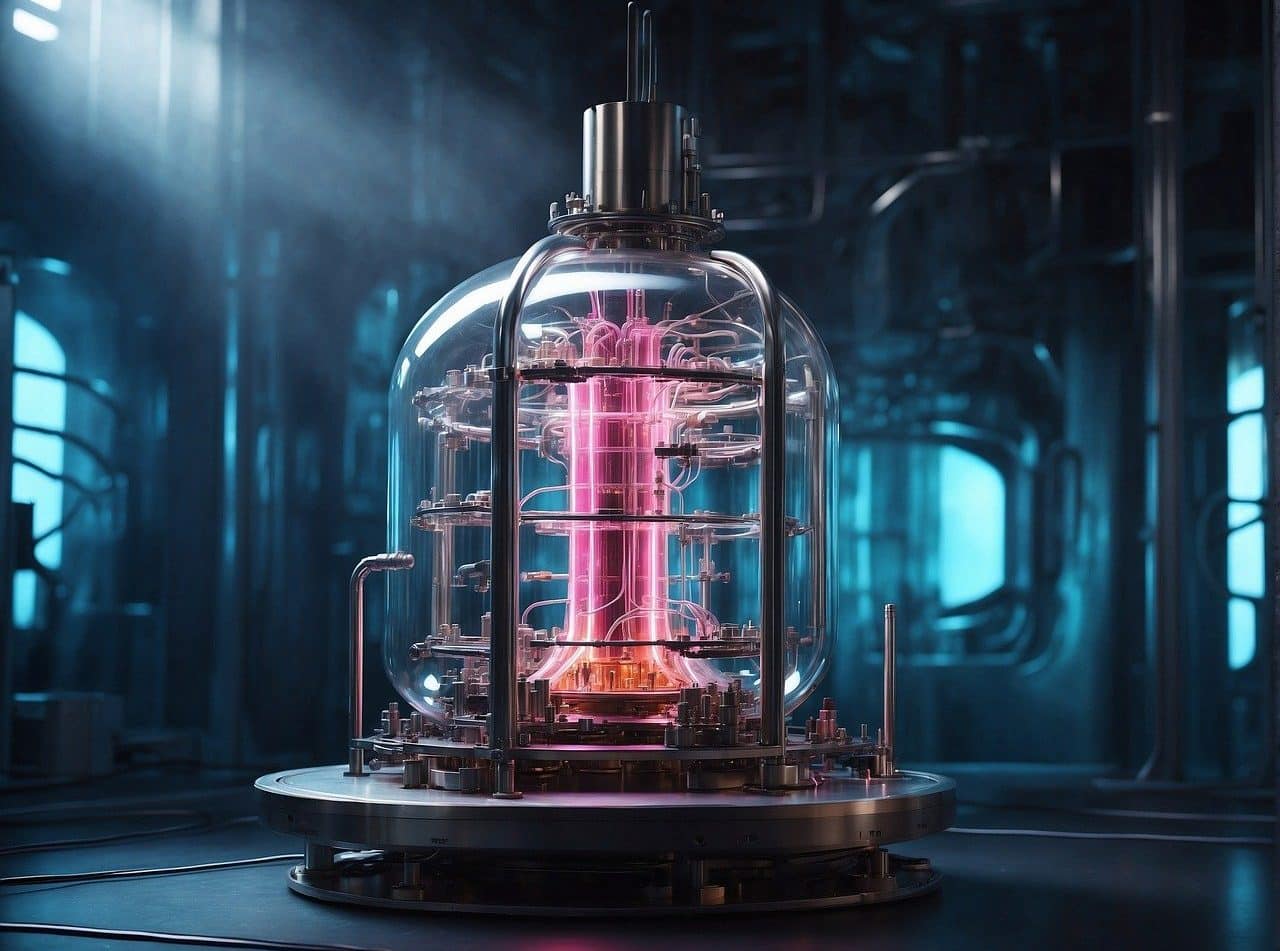

Quantum computers operate at very low temperatures.

Quantum computing is a computing paradigm based on quantum entanglement and superposition. For certain problems, this offers far greater processing capacity than traditional, or classical, computing.

While conventional computing represents information in binary, with each bit holding only one state (0 or 1), quantum computing allows both states to overlap and relies on the properties of atoms and subatomic particles to process information. Thus, the basic unit of quantum computing is the quantum bit (qubit), which can be in a superposition of both states.

History of quantum computing

The history of quantum computing began in the 1980s, when the first pioneering theories about quantum computation appeared. The American physicist Paul Benioff (1930–2022) is regarded as a pioneer, since he demonstrated, at least in theory, that a computer could operate according to the principles of quantum mechanics.

Benioff proposed a quantum mechanical model of a reversible Turing machine. The theoretical development of quantum computing continued with Richard Feynman (1918–1988), who advocated using quantum phenomena to perform computations.

David Deutsch (born 1953), for his part, described the first quantum computer capable of simulating any other computer of this type, which implies that such a machine can run different quantum algorithms. In the 1990s, Peter Shor (born 1959) devised an algorithm (Shor's algorithm) for integer factorization that runs far faster than is possible on a conventional computer. Lov Grover (born 1961), in turn, proposed another algorithm (Grover's algorithm) for searching data.

The theory of quantum computing was first put into practice in the late 1990s. In this period, a qubit was propagated using amino acids, and a three-qubit machine was built that was able to run the quantum search algorithm developed by Grover.

Shor's algorithm for quantum factorization, meanwhile, was run for the first time in 2001. Other achievements included the creation of a qubyte (eight qubits) and a quantum bus.

In 2011, D-Wave, a quantum computing company, completed the first sale of a computer of this type. Eight years later, IBM unveiled the first quantum computer for commercial use.

In quantum computing, the basic circuit that operates on a small number of qubits is called a quantum gate.

How it works

To understand how quantum computing works, it helps to first consider how conventional computing works. There, the minimum unit of information is the bit, which can take only one of two values (represented by 0 and 1). Information is represented by using and combining bits.

In quantum computing, however, the minimum unit of information is the qubit (short for quantum bit). Here the principle of quantum superposition applies: a qubit can be in a combination of the states 0 and 1 at the same time, and only yields a definite 0 or 1 when it is measured. This capacity for superposition translates into a greater capacity for representing information.

Quantum computing, in short, does not work with electrical voltages (in traditional computing, 0 and 1 are associated with "on" and "off"). It works at the level of the quantum: the minimum value that a physical quantity can take in a physical system. Thanks to quantum superposition, qubits make it possible to carry out many operations at the same time.
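As a minimal sketch of superposition, a single qubit can be modeled as a pair of amplitudes, one for 0 and one for 1. The snippet below is a plain-Python illustration, not the interface of any real quantum library: the names `ZERO`, `hadamard` and `probabilities` are chosen here for the example. It applies a Hadamard gate, a standard gate that turns the state 0 into an equal superposition, and then computes the probability of measuring each value.

```python
import math

# A single-qubit state is modeled as a pair of amplitudes (a0, a1)
# for the basis states 0 and 1. This is an illustrative model only.
ZERO = (1.0, 0.0)

def hadamard(state):
    """Apply the Hadamard gate, which maps state 0 to an equal superposition."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))

def probabilities(state):
    """Born rule: the probability of each outcome is |amplitude|^2."""
    return tuple(abs(a) ** 2 for a in state)

plus = hadamard(ZERO)
p0, p1 = probabilities(plus)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
```

Both outcomes are equally likely, which is the sense in which the qubit holds 0 and 1 "at the same time" until it is measured.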

Another key concept is entanglement. This property means that the states of two entangled qubits are correlated, so that the result of measuring one determines the corresponding result for the other. Quantum algorithms exploit these correlations to operate on several qubits jointly.

Quantum computing may also make use of quantum interference. Here, the properties of superposition are used to cancel or reinforce certain outcomes, which can improve the efficiency and precision of the calculations.
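The correlation between entangled qubits can be sketched with a small simulation. The example below assumes a two-qubit state stored as four amplitudes (for 00, 01, 10 and 11) and prepares a standard entangled state, a Bell pair, using a Hadamard gate followed by a CNOT gate. The function names are hypothetical helpers written for this illustration, and the measurement is simulated with a seeded pseudo-random generator.

```python
import math
import random

def hadamard_on_first(state):
    """Apply a Hadamard gate to the first qubit of a two-qubit state.
    Amplitudes are ordered 00, 01, 10, 11."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot(state):
    """CNOT with the first qubit as control: swaps the 10 and 11 amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def measure(state, rng):
    """Sample a two-bit outcome with probability |amplitude|^2."""
    probs = [abs(a) ** 2 for a in state]
    index = rng.choices(range(4), weights=probs)[0]
    return index >> 1, index & 1

# Start in 00, then entangle the two qubits.
bell = cnot(hadamard_on_first([1.0, 0.0, 0.0, 0.0]))

rng = random.Random(0)
samples = [measure(bell, rng) for _ in range(1000)]
print(all(first == second for first, second in samples))
```

Each individual result is random, yet the two qubits always agree: only 00 and 11 have nonzero probability in this state.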
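Interference can be illustrated with a single qubit. In the sketch below, which uses illustrative helper names rather than any real library's API, applying the Hadamard gate twice makes the two contributions to outcome 1 cancel while those to outcome 0 reinforce each other. Inserting a sign flip (the Z gate) between the two Hadamard gates reverses which outcome is cancelled.

```python
import math

def hadamard(state):
    """Hadamard gate on a single-qubit state (a0, a1)."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))

def phase_flip(state):
    """Z gate: flips the sign of the amplitude of state 1."""
    a0, a1 = state
    return (a0, -a1)

def probabilities(state):
    """Outcome probabilities, rounded to hide floating-point noise."""
    return tuple(round(abs(a) ** 2, 6) for a in state)

start = (1.0, 0.0)

# H then H: contributions to outcome 1 cancel, those to outcome 0 add up.
no_phase = probabilities(hadamard(hadamard(start)))

# H, Z, H: the sign flip reverses which outcome is cancelled.
with_phase = probabilities(hadamard(phase_flip(hadamard(start))))

print(no_phase, with_phase)
```

Quantum algorithms are designed so that, in a similar way, amplitudes leading to wrong answers tend to cancel and those leading to right answers tend to add up.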

Quantum computing enables the development of quantum machine learning.

Challenges of quantum computing

Although its history dates back four decades, quantum computing still has a long way to go. Various obstacles that are not easy to overcome stand in the way of faster progress.

For one thing, a quantum computer needs an operating temperature close to -273 °C (near absolute zero) and must be isolated from the Earth's magnetic field. Otherwise, the atoms would move, collide with each other or interact with the environment.

Qubits, moreover, are sensitive to perturbations. A perturbation can cause a quantum error that disturbs the superposed states and collapses them to a classical state.

Quantum decoherence, meanwhile, refers to the loss of the reversibility of the steps involved in a quantum algorithm. To protect information against errors caused by decoherence, a technique known as quantum error correction is used.
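One of the simplest error-correcting schemes, the three-qubit bit-flip code, gives a feel for how this works. The sketch below is a simplified simulation under stated assumptions: it handles only a single bit-flip error (real decoherence also produces other kinds of errors), it stores the state as a dictionary from bit tuples to amplitudes, and all function names are hypothetical. The key idea is that the parity checks reveal where the error occurred without revealing the encoded amplitudes.

```python
import math

def encode(a, b):
    """Encode a*(0) + b*(1) as a*(000) + b*(111), the three-qubit bit-flip code."""
    return {(0, 0, 0): a, (1, 1, 1): b}

def flip(state, qubit):
    """Apply an X (bit-flip) gate to one qubit of every basis state."""
    flipped = {}
    for bits, amp in state.items():
        new_bits = list(bits)
        new_bits[qubit] ^= 1
        flipped[tuple(new_bits)] = amp
    return flipped

def syndrome(state):
    """Parities of qubit pairs (0, 1) and (1, 2). They are identical for every
    basis state present, so reading them does not reveal a or b."""
    parities = {(b[0] ^ b[1], b[1] ^ b[2]) for b in state}
    assert len(parities) == 1
    return parities.pop()

# Which qubit to flip back for each syndrome; None means no error detected.
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(state):
    """Undo a single bit-flip error identified by the syndrome."""
    target = CORRECTION[syndrome(state)]
    return state if target is None else flip(state, target)

a, b = math.sqrt(0.3), math.sqrt(0.7)
logical = encode(a, b)
damaged = flip(logical, 1)  # hypothetical single bit-flip error on qubit 1
recovered = correct(damaged)
print(recovered == logical)
```

The encoded superposition survives the error because the correction acts on the position of the flip, never on the amplitudes themselves.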